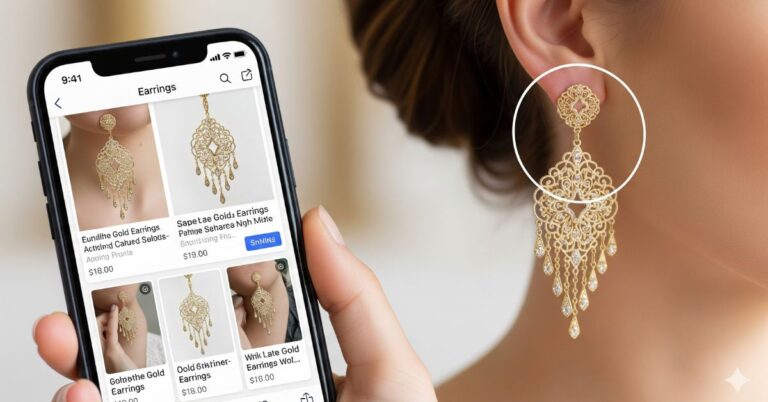

The new Amazon Lens Live visual search feature instantly scans real-world items and integrates the Rufus AI assistant to provide product summaries and answer questions directly in the camera view.

Trying to describe a unique visual item with just words is a common frustration for shoppers. This “keyword guesswork” often leads to irrelevant results and abandoned searches, a critical issue when more than half of consumers say visuals are more important than text.

For the 36% of shoppers already using visual search, a clunky process is a dealbreaker. Amazon is tackling this friction head-on with its new Lens Live feature, which transforms a customer’s phone into a powerful visual search tool that directly connects what they see with what they can buy.

Amazon Unveils Lens Live with Real-Time Scanning and AI Assistance

PC MAG: "Amazon is making it easier to shop for items you don’t know the name of or can’t describe appropriately with Lens Live."

Recently, Amazon announced a significant upgrade to its visual search capabilities, designed to make product discovery faster and more intuitive. This enhancement introduces Lens Live, a new experience powered by artificial intelligence that builds upon the existing Amazon Lens tool.

When customers with the feature open Amazon Lens, their camera instantly begins scanning for products in their environment. Top matching items are then immediately displayed in a swipeable carousel at the bottom of the screen, allowing for rapid comparisons.

The new interface allows users to perform several key actions without ever leaving the camera view. These functions streamline the shopping process from initial discovery to final decision.

- Tap an item within the camera’s frame to focus on a specific product.

- Add an item directly to the shopping cart by tapping a plus icon.

- Save a product to a wish list using a heart icon.

To provide deeper insights, Amazon has integrated its AI shopping assistant, Rufus, into the experience. Below the product carousel, Rufus presents suggested questions and concise summaries to help shoppers research items efficiently.

This powerful functionality runs on AWS services, including Amazon OpenSearch and Amazon SageMaker, to deploy machine learning models at scale. A computer vision model detects objects in real-time, while a deep learning model matches them against billions of products in Amazon’s catalog.
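The matching step can be pictured with a small sketch. It is not Amazon's implementation: the three-dimensional embeddings, the product names, and the `top_matches` helper are all hypothetical, and the sketch assumes each detected object and each catalog item has already been turned into a numeric vector by some model. A system searching billions of products would likely use an approximate nearest-neighbor index rather than the linear scan shown here.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as matching nothing
    return dot / (norm_a * norm_b)

def top_matches(query_embedding, catalog, k=3):
    """Return the k catalog item names most similar to the query vector."""
    scored = [
        (cosine_similarity(query_embedding, embedding), name)
        for name, embedding in catalog.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:k]]

# Hypothetical embeddings; a real model would produce hundreds of dimensions.
catalog = {
    "red flannel shirt": [0.9, 0.1, 0.0],
    "blue denim jacket": [0.1, 0.9, 0.1],
    "red wool scarf": [0.8, 0.0, 0.3],
}

# Hypothetical embedding of the object the camera detected.
query = [1.0, 0.05, 0.05]

print(top_matches(query, catalog, k=2))
```

The ranked names returned by `top_matches` correspond to what a carousel of top matching items would display, most similar first.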

Amazon Lens Live is currently available to tens of millions of US customers on the iOS Amazon Shopping app, with a wider rollout planned for the coming months. Customers who prefer the traditional methods can still use Amazon Lens by taking a picture, uploading an image, or scanning a barcode.

Lens Live, Amazon’s Strategic Push into AI Shopping

According to TechCrunch, Amazon is increasing its investment in AI-powered shopping experiences with the launch of its new Lens Live feature. This tool serves as an AI-driven upgrade to the platform’s existing Amazon Lens visual search capability.

The feature lets consumers discover products through real-time visual search, placing Amazon’s offering alongside competing tools such as Google Lens and Pinterest Lens. It also integrates with the company’s AI shopping assistant, Rufus, to provide users with deeper product insights.

Lens Live adds a real-time component to the platform’s visual search tools, but it does not replace the original function. Customers can still use the traditional Amazon Lens by taking a picture, uploading an image, or scanning a barcode.

This launch is one of several recent AI initiatives from the company aimed at enhancing the online shopping experience.

Lens Live Builds on Recent Visual Search Enhancements

Earlier this year, Amazon also highlighted its visual search innovations, reporting a 70% year-over-year increase in visual search usage worldwide. This growth was driven by a series of new features designed to make shopping simpler and more enjoyable for customers.

These enhancements expanded the capabilities of Amazon’s search tools, laying the groundwork for more advanced, real-time features like Lens Live. The recent updates focused on making search more specific, accessible, and visually driven.

- Visual Suggestions – When a customer types a visual description like “flannel shirt,” descriptive image suggestions now appear in the search bar. Users can select the image that best matches what they are looking for to see more relevant products.

- Add Text to Image Search – Users can now refine an image search by adding specific text commands. For example, they can upload a photo of a sofa and add text to specify a different color, brand, or material.

- Lock Screen Widget – For iOS users, a new widget allows the Amazon Lens camera to be launched directly from the phone’s lock screen. This feature provides immediate access to real-time visual search without needing to open the full app.

- More Like This – On any product image within the search results, customers can tap a “More Like This” button. This action quickly surfaces a variety of visually similar products, speeding up the discovery of alternative items.

- Videos in Search – For categories like electronics and home goods, product videos can now be played directly within the search results page. This allows shoppers to view products in action without having to click through to the product detail page.

- Circle to Search – After uploading an image to Amazon Lens, customers can now draw a circle to isolate a specific item within the picture. This function allows them to search for a single object in a complex image with multiple items.

The Rise of Visual Search in Ecommerce

Data Bridge Market Research reported that the global visual search market was valued at USD 41.72 billion in 2024 and is projected to reach USD 151.60 billion by 2032, growing at a CAGR of 17.50%. Rapid adoption in the e-commerce sector is a key driver of this growth, fueled by customer demand for more intuitive ways to shop.
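As a quick sanity check on those figures, the compound annual growth rate formula can be applied in both directions. This is a minimal sketch; the function names `cagr` and `project` are illustrative, and the only inputs are the numbers quoted above.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by a start and end value."""
    return (end_value / start_value) ** (1 / years) - 1

def project(start_value, rate, years):
    """Value after compounding start_value at a fixed annual rate."""
    return start_value * (1 + rate) ** years

years = 2032 - 2024  # eight compounding periods

implied_rate = cagr(41.72, 151.60, years)
projected_value = project(41.72, 0.175, years)

print(f"Implied CAGR: {implied_rate:.2%}")
print(f"Projected 2032 value: USD {projected_value:.2f} billion")
```

Both results land close to the reported numbers, so the 2024 value, the 2032 projection, and the 17.50% growth rate are consistent with one another.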

Kacper Rafalski wrote in his article on Netguru that traditional text-based search often falls short when customers want to purchase something they spot in real life. Studies show that 62% of millennials and Gen Z consumers are interested in visual search, and more than half of surveyed shoppers are open to engaging with shoppable content across platforms.

The technology has proven especially powerful in visually driven categories, such as fashion, home décor, and art. Retailers such as H&M, Flipkart, and Myntra have already added visual search capabilities, enabling customers to find products through images instead of text.

How it works:

- Object Detection – AI systems identify key features of an item—color, shape, texture, and pattern.

- Machine Learning Algorithms – These models match detected objects to retailer inventory, surfacing relevant product options.

- LLM Integration – Pairing large language models with computer vision connects visual inputs with product databases, with APIs like Google Vision AI supporting seamless deployment.
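To make the first two steps concrete, here is a toy sketch that uses color alone as the feature. It is an illustration under loud assumptions, not how any retailer's system works: the pixel data, the product names, and the helper functions are hypothetical, and production systems rely on learned models that also capture shape, texture, and pattern.

```python
def color_histogram(pixels, bins=4):
    """Coarse RGB histogram of (r, g, b) pixels, normalized to sum to 1."""
    hist = [0.0] * (bins ** 3)
    step = 256 // bins
    for r, g, b in pixels:
        # Clamp each channel to the last bin in case bins does not divide 256.
        ri = min(r // step, bins - 1)
        gi = min(g // step, bins - 1)
        bi = min(b // step, bins - 1)
        hist[ri * bins * bins + gi * bins + bi] += 1
    total = len(pixels)
    return [count / total for count in hist] if total else hist

def histogram_intersection(h1, h2):
    """Overlap between two normalized histograms (1.0 means identical)."""
    return sum(min(a, b) for a, b in zip(h1, h2))

# Hypothetical pixels of the detected item: mostly red on a white background.
detected_item = [(200, 30, 30)] * 8 + [(250, 250, 250)] * 2

# Hypothetical retailer inventory, each product represented by sample pixels.
inventory = {
    "red mug": [(210, 20, 40)] * 9 + [(240, 240, 240)] * 1,
    "green mug": [(20, 200, 30)] * 9 + [(240, 240, 240)] * 1,
}

query_hist = color_histogram(detected_item)
scores = {
    name: histogram_intersection(query_hist, color_histogram(pixels))
    for name, pixels in inventory.items()
}
best_match = max(scores, key=scores.get)
print(best_match, scores)
```

Histogram intersection is used here only because it is easy to follow; it stands in for whatever similarity measure a trained model would provide.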

Benefits for retailers:

- Enhanced Customer Experience – Easier discovery by removing the need for precise text descriptions.

- Increased Conversion Rates – Shortens the path from inspiration to purchase.

- Expanded Discovery – Shoppers are exposed to items they might not have found otherwise.

- Cross-Selling Opportunities – Features like “Complete the Look” recommend complementary items and drive higher order values.

The rapid evolution of visual search demonstrates its potential to reshape how customers shop and how retailers design digital experiences. As adoption accelerates, it is becoming less of a niche tool and more of a standard expectation in online retail.

The Competitive Landscape of Visual Search in Retail

Amazon is not the only retailer experimenting with visual search. Competitors like Walmart, eBay, Target, and even independent Shopify stores have already introduced their own versions of the feature, though with varying degrees of accessibility and ease of use.

Walmart launched its TrendGetter visual search tool in 2022, but access is limited. Instead of being integrated into the Walmart app, it is available only through the separate TrendGetter.com website.

- How it works – Upload or snap a photo of a product, and the tool will scan Walmart’s catalog for similar items.

- This separation makes the process more tedious, as noted by industry observers.

eBay rolled out its Image Search feature in 2017 within its mobile app.

- Users tap the camera icon next to the search bar to take or upload a photo.

- The platform then surfaces visually similar listings, with filters available to refine by price, color, or condition.

Target became an early adopter in 2017 by integrating Pinterest Lens into its app.

- Shoppers inside a Target store can snap a picture of a product to find matching or similar options online.

- This allows customers to browse beyond the store’s shelves and see the retailer’s wider catalog.

Shopify merchants can also take advantage of visual search technology to improve customer experience and drive higher conversions. By installing specialized apps from the Shopify App Store, sellers can add AI-powered image search directly into their storefronts.

With this feature, shoppers can upload an image and instantly see visually similar products from the store’s catalog. The tool works particularly well for customers who arrive with a product in mind but prefer a faster, more intuitive way to find it.

Key features available through Shopify’s visual search apps include:

- One-click setup for easy integration

- Customizable Smart Visual Search widget directly in the theme’s settings

- User-friendly interface designed for quick adoption

- Fast and accurate apparel search engine optimized for style-based browsing

- Automatic catalog sync to keep search results aligned with inventory

This functionality not only simplifies product discovery but also boosts user engagement and satisfaction. Many retailers report higher conversion rates when shoppers can bypass traditional text search and connect with products visually.

For sellers who want to expand beyond Amazon, an Amazon agency that specializes in both marketplace growth and direct-to-consumer strategies can help integrate visual search into a Shopify store. With expert support, brands can extend the same kind of discovery experience found in Amazon Lens Live to their own websites, strengthening their DTC channel while maintaining marketplace sales.

Data Bridge Market Research: "Consumers prefer searching with images over text, especially in fashion, home décor, and e-commerce, prompting businesses to integrate advanced visual recognition technologies."